Earlier this year, Stone Temple Consulting released a virtual assistant consumer survey showing the majority of respondents wanted the assistants to provide “answers” rather than conventional search results. Today, the firm published a follow-up study that measured the relative accuracy of the four major assistants.

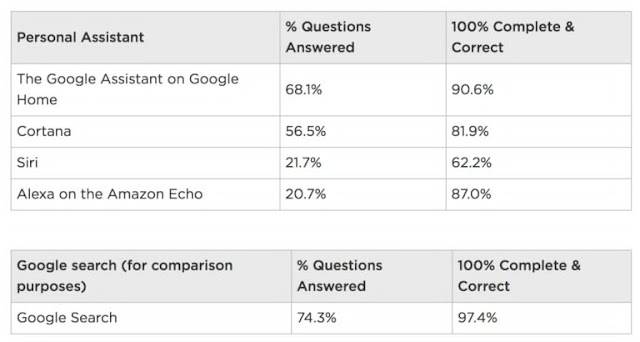

It compared results of “5,000 different questions about everyday factual knowledge” on Google Home, Alexa, Siri and Cortana, using traditional Google search results as a baseline for accuracy. The following table shows the study’s top-line results.

As one might have anticipated, the Google Assistant answered more questions and was correct more often than its rivals. Cortana came in second, followed by Siri and Alexa. Of the questions it could answer, Amazon’s Alexa was the second most accurate assistant. Siri had the highest percentage of wrong answers of the four competitors.

One of the interesting observations in the report is about featured snippets. Cortana had more featured snippets integrated than any of the others, even Google Home, although Google search had more. Siri and Alexa lagged far behind in the category, although they want to use third parties to deliver “answers” and transactional capabilities.

It compared results of “5,000 different questions about everyday factual knowledge” on Google Home, Alexa, Siri and Cortana, using traditional Google search results as a baseline for accuracy. The following table shows the study’s top-line results.

As one might have anticipated, the Google Assistant answered more questions and was correct more often than its rivals. Cortana came in second, followed by Siri and Alexa. Of the questions it could answer, Amazon’s Alexa was the second most accurate assistant. Siri had the highest percentage of wrong answers of the four competitors.

One of the interesting observations in the report is about featured snippets. Cortana had more featured snippets integrated than any of the others, even Google Home, although Google search had more. Siri and Alexa lagged far behind in the category, although they want to use third parties to deliver “answers” and transactional capabilities.

Great information, better still to find out your blog that has a great job. Nicely done virtual assistant tasks

ReplyDelete